Parallel tool calling

We've added support for parallel tool calls in our Editor and API.

The latest OpenAI turbo models can respond with more than one tool call for a single query; this is referred to as parallel tool calling.

Editor updates

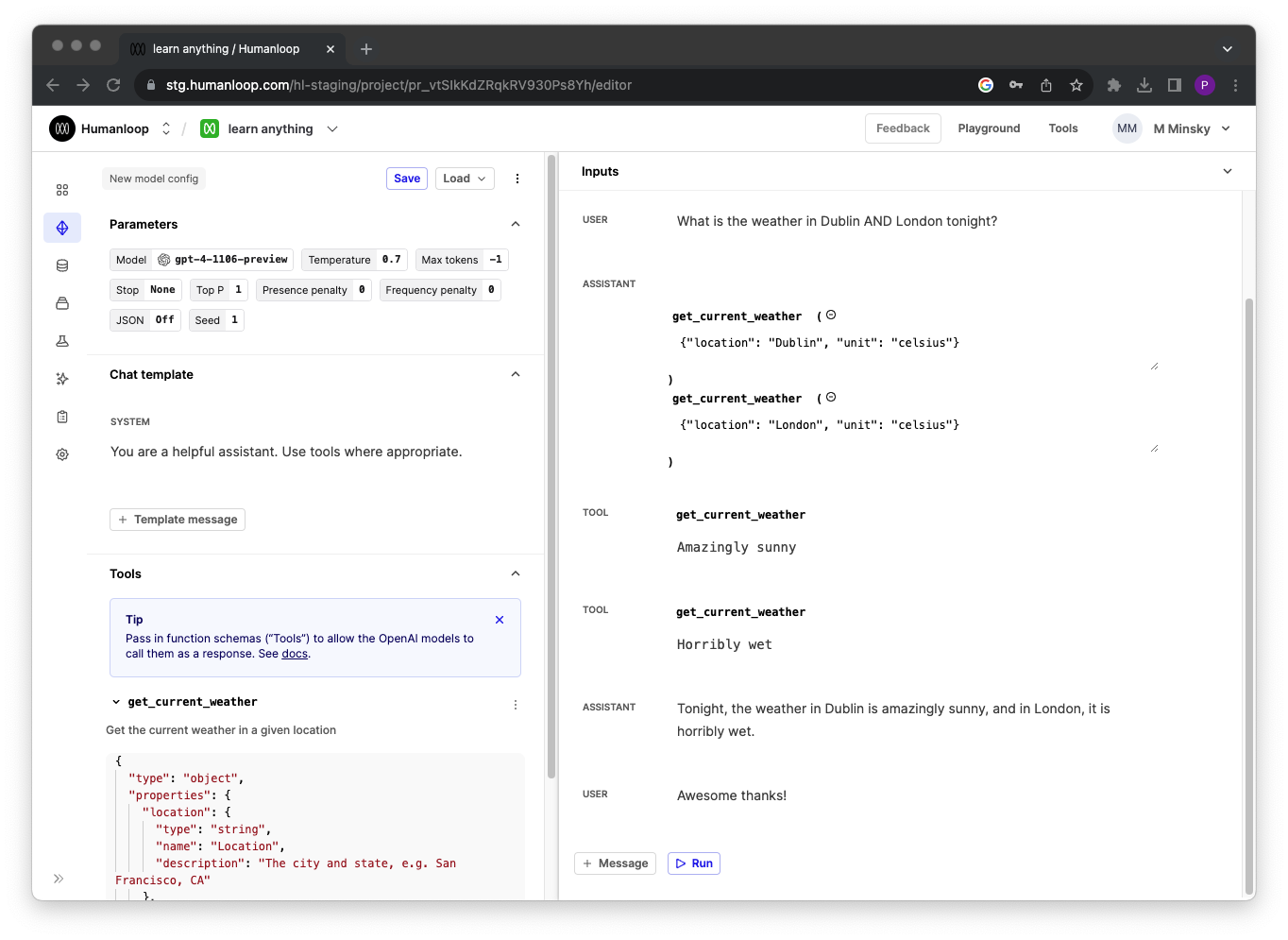

You can now experiment with this new feature in our Editor:

- Select one of the new turbo models in the model dropdown.

- Specify a tool in your model config on the left-hand side.

- Make a request that would require multiple calls to answer correctly.

- As shown here for a weather example, the model responds with multiple tool calls in the same message.

API implications

We've added a new field, tool_calls, to the response model of our chat endpoints. It contains the array of tool calls returned by the model. The pre-existing tool_call parameter remains but is now marked as deprecated.
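As a minimal sketch of how client code might handle both fields while tool_call is deprecated, the helper below prefers tool_calls and falls back to the legacy tool_call. It assumes the assistant message from the response is available as a plain dictionary; extract_tool_calls and the sample ids are hypothetical and not part of the Humanloop SDK.

```python
from typing import Any


def extract_tool_calls(message: dict[str, Any]) -> list[dict[str, Any]]:
    """Return every tool call in an assistant message as a list.

    Assumption: `message` is a dict that may carry the new `tool_calls`
    array, the deprecated single `tool_call`, or neither.
    """
    tool_calls = message.get("tool_calls")
    if tool_calls:
        return list(tool_calls)
    legacy_call = message.get("tool_call")
    return [legacy_call] if legacy_call else []


# Hypothetical sample messages for illustration only.
parallel_message = {
    "role": "assistant",
    "tool_calls": [{"id": "call_a"}, {"id": "call_b"}],
}
legacy_message = {"role": "assistant", "tool_call": {"id": "call_c"}}

print([call["id"] for call in extract_tool_calls(parallel_message)])
print([call["id"] for call in extract_tool_calls(legacy_message)])
```

Normalizing to a list means the rest of the application only needs one code path, whether the model returned one call or several.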

Each element in the tool_calls array has an associated id. When passing a tool's response back to the model, send a message with role=tool, the tool response as its content, and the tool_call_id of the call being answered.

from humanloop import Humanloop

# Initialize the Humanloop SDK with your API key
humanloop = Humanloop(api_key="<YOUR Humanloop API KEY>")

# Form of the message when providing the tool response to the model
chat_response = humanloop.chat_deployed(
    project_id="<YOUR PROJECT ID>",
    messages=[
        {
            "role": "tool",
            "content": "Horribly wet",
            "tool_call_id": "call_dwWd231Dsdw12efoOwdd",
        }
    ],
)
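With parallel tool calling, each returned tool call needs its own tool message carrying the matching tool_call_id. The sketch below shows one way to build those messages locally, without calling the API. It assumes each element of tool_calls follows the OpenAI-style shape (an id plus a function object with a name and JSON-encoded arguments); get_weather, build_tool_messages, and the sample ids are hypothetical.

```python
import json
from typing import Any, Callable


def get_weather(location: str) -> str:
    """Hypothetical stand-in for a real weather lookup."""
    return f"Horribly wet in {location}"


# Map tool names to the local functions that implement them.
TOOLS: dict[str, Callable[..., str]] = {"get_weather": get_weather}


def build_tool_messages(
    tool_calls: list[dict[str, Any]],
    tools: dict[str, Callable[..., str]],
) -> list[dict[str, str]]:
    """Run each tool call and return one role=tool message per call."""
    messages = []
    for call in tool_calls:
        function = call["function"]  # assumed OpenAI-style shape
        handler = tools[function["name"]]
        arguments = json.loads(function["arguments"])
        messages.append(
            {
                "role": "tool",
                "content": handler(**arguments),
                "tool_call_id": call["id"],
            }
        )
    return messages


# Hypothetical tool calls, as the model might return for a two-city query.
sample_tool_calls = [
    {"id": "call_1", "function": {"name": "get_weather", "arguments": '{"location": "London"}'}},
    {"id": "call_2", "function": {"name": "get_weather", "arguments": '{"location": "Paris"}'}},
]

tool_messages = build_tool_messages(sample_tool_calls, TOOLS)
for message in tool_messages:
    print(message)
```

The resulting messages can then be appended to the conversation and sent in the messages argument of the next chat request.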