Finetune a model

In this guide we will demonstrate how to use Humanloop’s fine-tuning workflow to produce improved models that leverage your user feedback data.

Prerequisites

- You already have a project created. If not, please pause and first follow our project creation guides.

- You have integrated `humanloop.complete()` (or the equivalent `chat` or `log` methods) and `humanloop.feedback()` with the API or Python SDK.

A common question is how much data you need to fine-tune effectively. Here we can reference the OpenAI guidelines:

> The more training examples you have, the better. We recommend having at least a couple hundred examples. In general, we've found that each doubling of the dataset size leads to a linear increase in model quality.
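One way to read the doubling heuristic is that each doubling adds a roughly constant amount of quality, so the expected gain tracks the base-2 logarithm of the dataset size. Under that reading, going from 200 to 800 examples is two doublings. The helper below is a hypothetical illustration of that arithmetic, not an OpenAI or Humanloop tool. The function name and the 200-example baseline (taken from "a couple hundred") are our assumptions.

```python
import math

# Assumed baseline: OpenAI recommends "at least a couple hundred" examples.
MIN_RECOMMENDED_EXAMPLES = 200


def doublings_over_baseline(n_examples: int, baseline: int = MIN_RECOMMENDED_EXAMPLES) -> float:
    """Return how many times the dataset has doubled relative to `baseline`.

    If each doubling adds a roughly constant amount of quality, this value is
    proportional to the expected improvement. Negative values mean the
    dataset is smaller than the baseline.
    """
    if n_examples <= 0 or baseline <= 0:
        raise ValueError("example counts must be positive")
    return math.log2(n_examples / baseline)


for n in (100, 200, 400, 800, 1600):
    print(f"{n:>5} examples -> {doublings_over_baseline(n):+.1f} doublings vs. baseline")
```

Because the relationship is logarithmic, each further step of improvement costs twice as much data as the previous one.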

The first part of fine-tuning is to select the data you wish to fine-tune on.

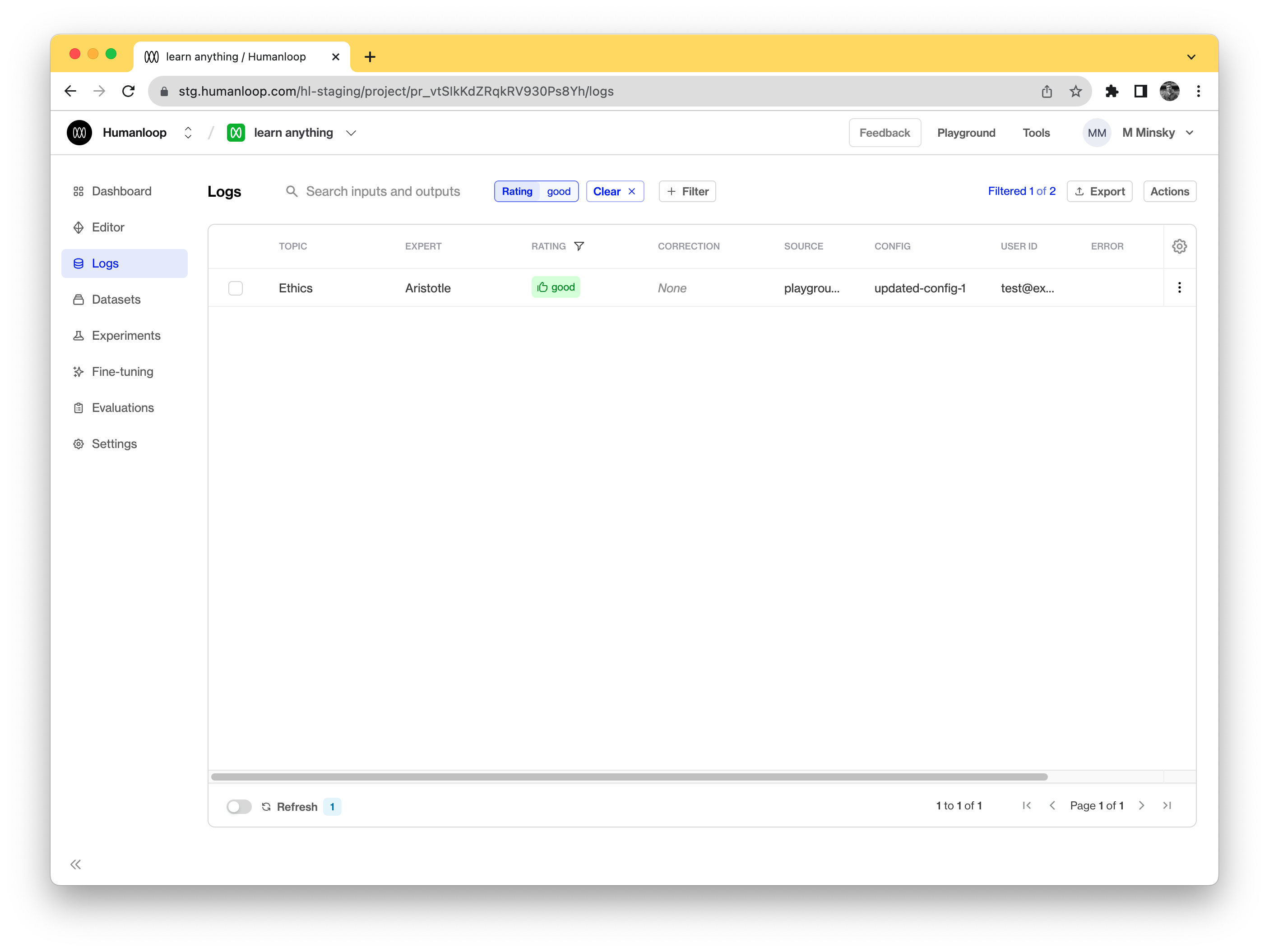

- Go to your Humanloop project and navigate to the Logs tab.

- Create a filter (using the + Filter button above the table) that selects the logs you would like to fine-tune on.

  - For example, all the logs that have received an upvote in the feedback captured from your end users.
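The filter itself is configured in the Humanloop UI, but its logic is easy to state. The sketch below mimics the upvote example locally on a hypothetical `Log` record. The field names and the `"upvote"` label are assumptions for illustration only, not Humanloop's data model or API.

```python
from dataclasses import dataclass, field


@dataclass
class Log:
    """Hypothetical local stand-in for a logged generation and its feedback."""
    inputs: dict
    output: str
    feedback: list[str] = field(default_factory=list)  # e.g. ["upvote"]


def select_for_fine_tuning(logs: list[Log], label: str = "upvote") -> list[Log]:
    """Keep only the logs that received at least one `label` feedback."""
    return [log for log in logs if label in log.feedback]


logs = [
    Log({"question": "What is 2 + 2?"}, "4", ["upvote"]),
    Log({"question": "Capital of France?"}, "Berlin", ["downvote"]),
    Log({"question": "Largest planet?"}, "Jupiter", []),
]
selected = select_for_fine_tuning(logs)
print([log.output for log in selected])
```

Logs without any feedback are excluded as well, which matches the intent of training only on outputs your users explicitly approved.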

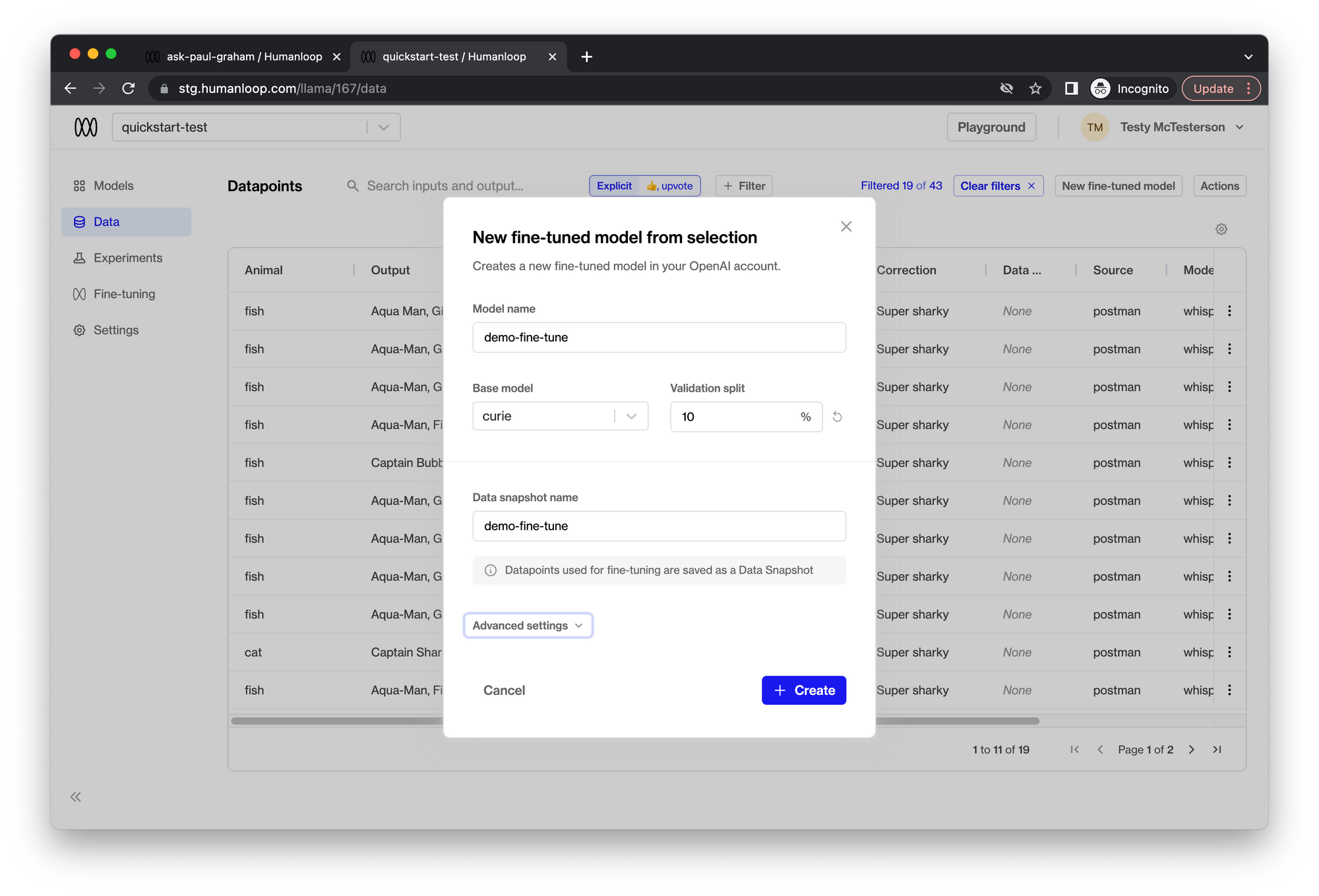

- Click the Actions button, then click the New fine-tuned model button to set up the fine-tuning process.

- Enter the appropriate parameters for the fine-tuned model:

  - Enter a Model name. This will be used as the `suffix` parameter in OpenAI’s fine-tuning interface. For example, a suffix of "custom-model-name" would produce a model name like `ada:ft-your-org:custom-model-name-2022-02-15-04-21-04`.

  - Choose the Base model to fine-tune. This can be `ada`, `babbage`, `curie`, or `davinci`.

  - Select a Validation split percentage. This is the proportion of data that will be used for validation. Metrics will be periodically calculated against the validation data during training.

  - Enter a Data snapshot name. Humanloop associates a data snapshot with every fine-tuned model instance, so it is easy to keep track of what data was used (you can see your existing data snapshots on the Settings/Data snapshots page).


- Click Create. The fine-tuning process runs asynchronously and may take up to a couple of hours to complete, depending on the size of your data snapshot.
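The helper below shows how the Model name and Base model fields combine into the final model name. It reproduces the pattern in the guide's Model name example: base model, `ft-` plus your organization, your suffix, then a creation timestamp. That pattern is inferred from the single example. `expected_model_name` and the `org` value are hypothetical, and OpenAI assigns the real name, so treat this only as a way to anticipate what it will look like.

```python
from datetime import datetime

# Base models listed in this guide.
BASE_MODELS = {"ada", "babbage", "curie", "davinci"}


def expected_model_name(base_model: str, org: str, suffix: str, created_at: datetime) -> str:
    """Build a name following the pattern inferred from the guide's example."""
    if base_model not in BASE_MODELS:
        raise ValueError(f"unsupported base model: {base_model!r}")
    # Timestamp format inferred from "2022-02-15-04-21-04" in the example.
    timestamp = created_at.strftime("%Y-%m-%d-%H-%M-%S")
    return f"{base_model}:ft-{org}:{suffix}-{timestamp}"


print(expected_model_name("ada", "your-org", "custom-model-name", datetime(2022, 2, 15, 4, 21, 4)))
```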
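Humanloop applies the Validation split for you. As a rough illustration of what the percentage means, the sketch below shuffles a dataset and holds out that share of it for validation. The function name, the seeded shuffle, and the rounding rule are our assumptions, not a description of how Humanloop or OpenAI actually partition the data.

```python
import random
from typing import Sequence, TypeVar

T = TypeVar("T")


def train_validation_split(
    examples: Sequence[T], validation_pct: float, seed: int = 0
) -> tuple[list[T], list[T]]:
    """Shuffle `examples` and hold out `validation_pct` percent for validation."""
    if not 0 <= validation_pct < 100:
        raise ValueError("validation_pct must be in [0, 100)")
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n_validation = round(len(shuffled) * validation_pct / 100)
    return shuffled[n_validation:], shuffled[:n_validation]


train, validation = train_validation_split(range(250), validation_pct=20)
print(len(train), len(validation))  # 200 50
```

A larger split gives more reliable validation metrics but leaves fewer examples for training. This matters most when you are near the couple-hundred-example minimum.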

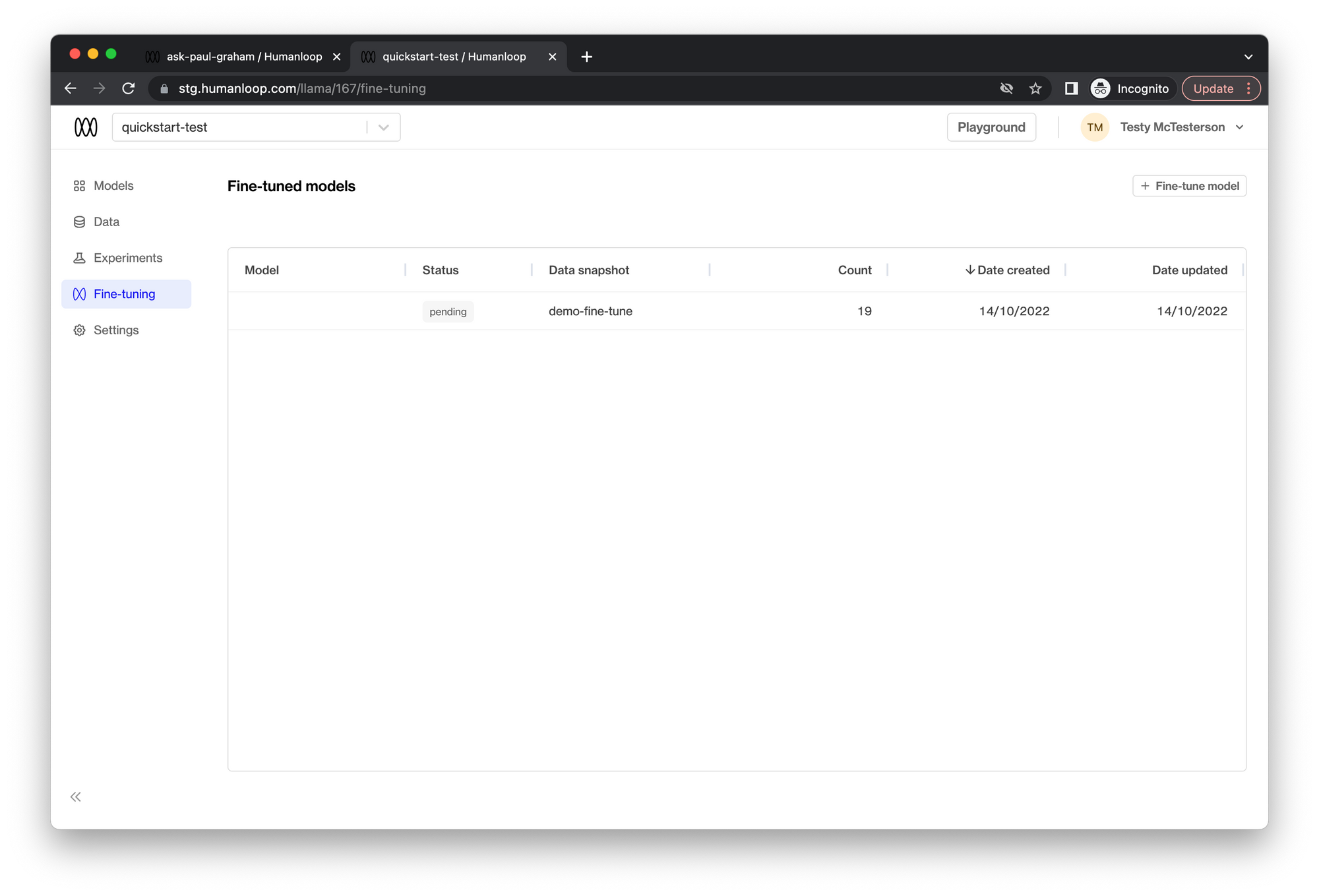

- Navigate to the Fine-tuning tab to see the progress of the fine-tuning process.

Coming soon: notifications for when your fine-tuning jobs have completed.

- When the Status of the fine-tuned model is marked as Successful, the model is ready to use.
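This guide tracks progress in the Fine-tuning tab, and completion notifications are not yet available. If you have some way to read a job's status programmatically, a polling loop like the one below can wait for it to finish. Several parts are assumptions:

- `get_status` is a hypothetical callable you would supply; this guide documents no such API.
- The three-hour default timeout loosely follows "up to a couple of hours".
- Every status label other than Successful is assumed.

```python
import time
from typing import Callable

# Only "Successful" appears in this guide; "Failed" is an assumed label.
TERMINAL_STATUSES = {"Successful", "Failed"}


def wait_for_fine_tune(
    get_status: Callable[[], str],
    poll_seconds: float = 60.0,
    timeout_seconds: float = 3 * 60 * 60,
) -> str:
    """Poll `get_status` until it returns a terminal status, or raise on timeout."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = get_status()
        if status in TERMINAL_STATUSES:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"fine-tuning job still {status!r} after {timeout_seconds} s")
        time.sleep(poll_seconds)  # wait before checking again


# Usage with a fake status source ("Pending" and "Running" are made-up labels).
fake_statuses = iter(["Pending", "Running", "Successful"])
print(wait_for_fine_tune(lambda: next(fake_statuses), poll_seconds=0))
```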

🎉 You can now include this fine-tuned model in a new model config for your project to evaluate its performance. You can do this from the Playground or with the SDK.
