Integrate Tools

Improve your LLMs with external data sources and integrations

What are tools?

Humanloop Tools fall into two categories:

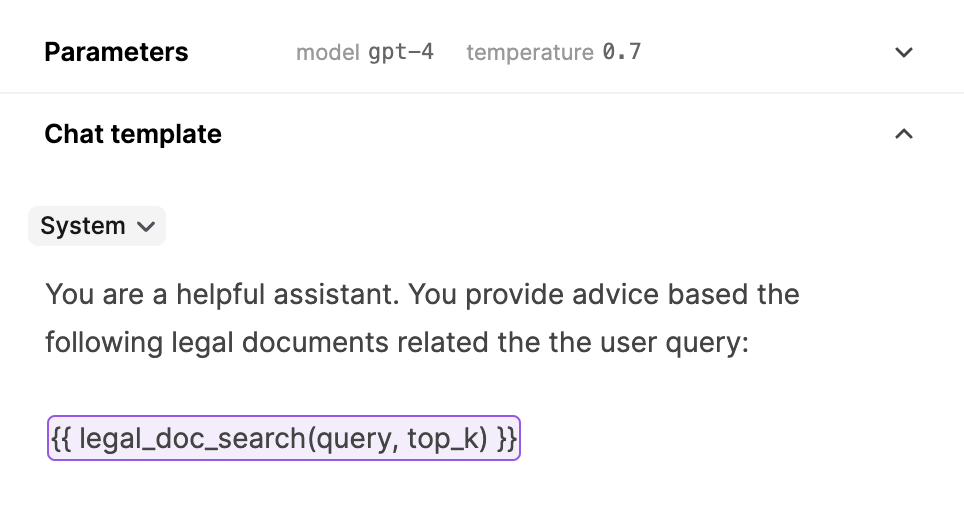

- Ways to integrate services or data sources into prompts. Integrating tools into your prompts lets you fetch information to pass into your LLM calls. We support both external API integrations and integrations within Humanloop.

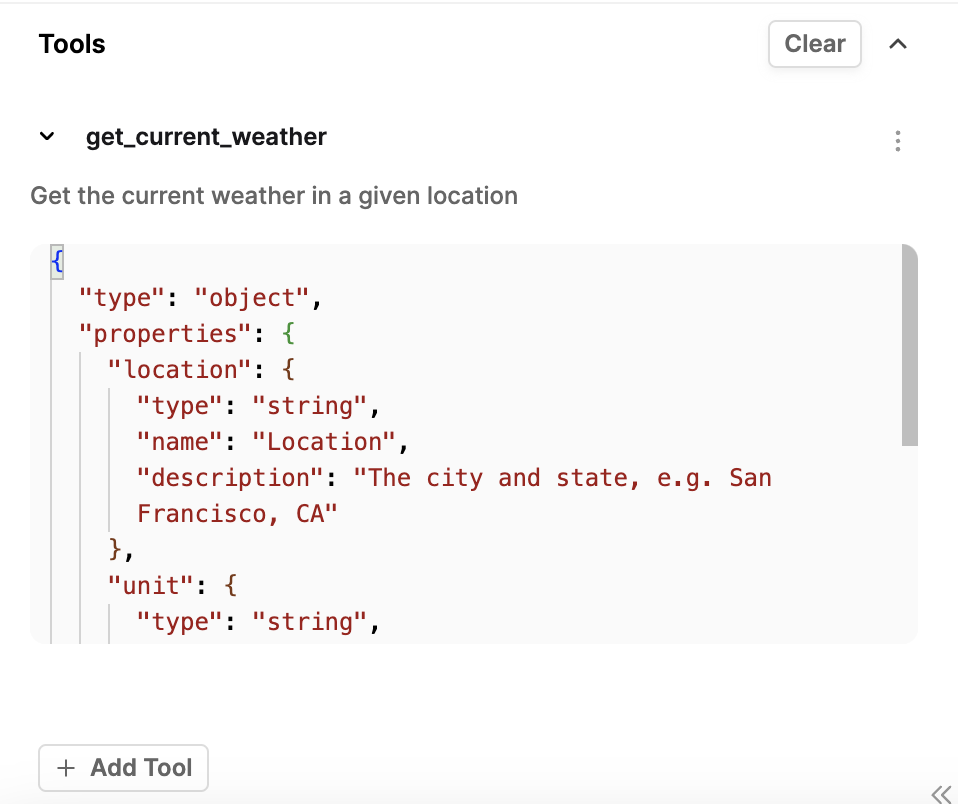

- Ways to specify the expected request/response model to an LLM, as in OpenAI function calling. These tools are defined with JSON Schema syntax.

If your prompt contains a tool call, e.g. {{ google("population of india") }}, the tool is executed and the call is replaced with the resulting text before the prompt is sent to the model. If the tool call takes an input variable, e.g. {{ google(query) }}, the tool uses the value of query that is passed into the LLM call.
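The substitution step can be sketched in Python. This is a minimal illustration under stated assumptions, not Humanloop's template engine: `render_prompt`, the regular expression, and the stub `google` function are hypothetical, and only the two forms mentioned here are handled, a quoted literal argument and a bare input-variable name.

```python
import re
from typing import Callable, Dict

# Hypothetical tool registry type: a tool name maps to a callable that returns text.
# A real integration (e.g. a Google Search tool) would call an external API instead.
ToolFn = Callable[[str], str]

# Matches {{ name("literal") }} or {{ name(variable) }}, with optional whitespace.
TOOL_CALL = re.compile(r'\{\{\s*(\w+)\(\s*(?:"([^"]*)"|(\w+))\s*\)\s*\}\}')


def render_prompt(template: str, tools: Dict[str, ToolFn], inputs: Dict[str, str]) -> str:
    """Execute each tool call in the template and replace it with the tool's text output."""

    def substitute(match: re.Match) -> str:
        name, literal, variable = match.group(1), match.group(2), match.group(3)
        if name not in tools:
            raise KeyError(f"unknown tool: {name}")
        # A quoted argument is used as-is; a bare name is looked up in the call's inputs.
        arg = literal if literal is not None else inputs[variable]
        return tools[name](arg)

    return TOOL_CALL.sub(substitute, template)


def fake_google(query: str) -> str:
    """Stub standing in for a real search integration (hypothetical)."""
    return f"[search results for: {query}]"


if __name__ == "__main__":
    tools = {"google": fake_google}
    print(render_prompt('Context: {{ google("population of india") }}', tools, {}))
    print(render_prompt("Context: {{ google(query) }}", tools, {"query": "capital of France"}))
```

Because tool calls are resolved before the request is made, the model only ever sees the tool's text output, never the call itself.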

Tools for function calling can be defined inline in our Editor or centrally managed for an organization, and follow OpenAI's function calling convention.
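In OpenAI's function calling convention, a function is described by a name, a description, and a JSON Schema object for its parameters; the model replies with the arguments as a JSON string. The Python sketch below shows that shape with a hypothetical `get_current_weather` function, plus a hypothetical `check_arguments` helper that sanity-checks the returned arguments. It covers only required keys, primitive types, and enums, and is not a full JSON Schema validator or part of the Humanloop API.

```python
import json
from typing import Any, Dict, List

# Example function definition in OpenAI's function calling format (tool is hypothetical).
GET_WEATHER: Dict[str, Any] = {
    "name": "get_current_weather",
    "description": "Get the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {
            "location": {"type": "string", "description": "City name, e.g. London"},
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["location"],
    },
}

# Subset of JSON Schema primitive types mapped to Python types.
_JSON_TYPES = {
    "string": str,
    "number": (int, float),
    "integer": int,
    "boolean": bool,
    "object": dict,
    "array": list,
}


def check_arguments(function_def: Dict[str, Any], arguments_json: str) -> List[str]:
    """Return a list of problems with the model's JSON arguments (empty if none)."""
    schema = function_def["parameters"]
    args = json.loads(arguments_json)
    problems = []
    for key in schema.get("required", []):
        if key not in args:
            problems.append(f"missing required argument: {key}")
    for key, value in args.items():
        prop = schema["properties"].get(key)
        if prop is None:
            problems.append(f"unexpected argument: {key}")
            continue
        expected = _JSON_TYPES.get(prop.get("type"))
        # bool subclasses int in Python, so reject booleans unless a boolean is expected.
        if expected is not None and (
            not isinstance(value, expected) or (isinstance(value, bool) and expected is not bool)
        ):
            problems.append(f"wrong type for {key}: expected {prop['type']}")
        if "enum" in prop and value not in prop["enum"]:
            problems.append(f"invalid value for {key}: {value!r}")
    return problems


if __name__ == "__main__":
    print(json.dumps(GET_WEATHER, indent=2))  # definition sent alongside the prompt
    print(check_arguments(GET_WEATHER, '{"location": "Paris", "unit": "celsius"}'))
    print(check_arguments(GET_WEATHER, '{"unit": "kelvin"}'))
```

For complete validation, a dedicated JSON Schema library (for example the `jsonschema` package for Python) is a better fit than a hand-rolled check like this one.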

Supported Tools

Third-party integrations

- Pinecone Search - Vector similarity search using the Pinecone vector database and OpenAI embeddings.

- Google Search - Search Google through the SerpApi API: https://serpapi.com/.

- GET API - Send a GET request to an external API.
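Vector similarity search, as used by the Pinecone Search tool, presumably embeds the query and returns the stored vectors closest to it. The sketch below is a brute-force, in-memory stand-in using cosine similarity (one of the metrics Pinecone supports) over hand-written toy vectors. It does not call the Pinecone or OpenAI APIs; `cosine_similarity`, `top_k`, and the document IDs are hypothetical, and a production index would use approximate nearest-neighbour search rather than scoring every vector.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare zero vectors")
    return dot / (norm_a * norm_b)


def top_k(
    query: Sequence[float], index: Dict[str, Sequence[float]], k: int = 2
) -> List[Tuple[str, float]]:
    """Brute-force search: score every stored vector and return the k most similar."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    # Toy 3-dimensional "embeddings"; real OpenAI embeddings have far more dimensions.
    index = {
        "doc-a": [1.0, 0.0, 0.0],
        "doc-b": [0.7, 0.7, 0.0],
        "doc-c": [0.0, 0.0, 1.0],
    }
    for doc_id, score in top_k([1.0, 0.1, 0.0], index):
        print(doc_id, round(score, 3))
```

The text of the top-scoring documents is what a retrieval tool would then insert into the prompt.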

Humanloop tools

- Snippet - Create reusable key/value pairs for use in prompts.

- JSON Schema - JSON Schema definitions for tool calling that can be shared across model configs.
